testing

5 stars

I did read this book, and it was very good; however, this is primarily a test post.

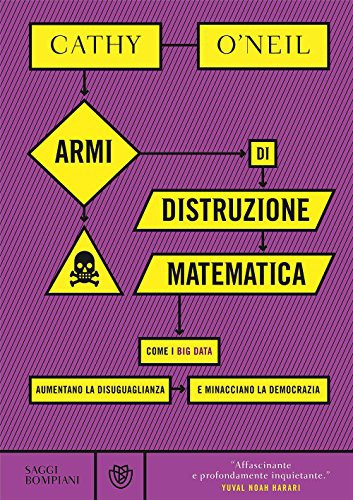

How Big Data Increases Inequality and Threatens Democracy

Hardcover, 368 pages

Italian language

Published Sept. 5, 2017 by Bompiani.

Far from being objective and transparent mathematical models, the algorithms that now dominate our hyperconnected daily lives are often true "weapons of math destruction": they ignore fundamental variables, embed prejudices, and offer no possibility of appeal when they get things wrong. These dangerous weapons judge teachers and students, sift through résumés, decide whether to grant or deny loans, evaluate workers' performance, influence voters, and monitor our health. Drawing on case studies from widely different fields that nonetheless touch every one of our lives, O'Neil exposes the risks of algorithmic discrimination and argues for fairer, more ethical mathematical models. Because dressing prejudice up in statistical clothing does not make it any less prejudiced.

This was an exceptional book. It's not heavy on statistics, but it explains what makes something a WMD (Weapon of Math Destruction). WMD may be a new term, but we have been exploited by WMDs for far longer than we think. It's not a new phenomenon, but it is one we should be aware of.

Give it a read and learn about them, so that we can all do better at combating them and use math not only to describe the world but to make it a better place to live in.

A very timely, detailed, and expert (yet highly readable) look at the damaging potential of using algorithms to regulate our lives. This highly relevant book is a must-read for anyone involved in data science, or simply for anyone who uses algorithms.

Working from a clear definition of what constitutes a WMD (opacity, negative feedback loops, a lack of corrective feedback, a tendency to punish the poor, a black-box model), O'Neil goes through a wide range of social institutions (health, education, work, politics, criminal justice, to name a few) and examines the damage done by WMDs. My own distaste for workplace wellness programs felt thoroughly validated.

In the end, O'Neil offers suggestions for what can be done to "tame" WMDs and reduce their damage (including trading some efficiency for fairness), or, better still, to use the same techniques that Big Data has made available for socially productive purposes.

One misconception you might hold right now is that computer algorithms are objective. Cathy O’Neil’s Weapons of Math Destruction first shows you the pinnacle of algorithmic objectivity: baseball. Baseball statistics and algorithms are transparent, measure the events they care about directly, and respond to feedback.

Then O’Neil pans the camera to the horror of algorithms that are opaque, rely on proxies, and rarely incorporate feedback, in areas such as education, finance, mortgages, predictive policing, recidivism, and insurance.

Computer algorithms do not turn their inputs into objective facts. Computer algorithms amplify the biases of the programmer and the dataset. We need to develop a societal understanding of the tools that automate our lives or we’ll forever be manipulated by them.
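
To make the feedback-loop point concrete, here is a toy Python sketch of my own (it is not from the book). It assumes two neighborhoods with identical true crime rates, that crime only gets recorded where patrols are sent, and a hypothetical allocation rule where each area's share of patrols is proportional to its recorded incidents raised to a `power`. The function name `simulate_patrols` and every number in it are made up for illustration.

```python
def simulate_patrols(initial_share_a: float, rounds: int, power: float = 2.0,
                     true_rate: float = 0.1, total_patrols: float = 100.0) -> list[float]:
    """Return neighborhood A's share of recorded incidents after each round.

    Toy model (hypothetical): both neighborhoods have the SAME true_rate;
    only the historical records they start with differ.
    """
    recorded_a = initial_share_a * 100.0
    recorded_b = (1.0 - initial_share_a) * 100.0
    shares = []
    for _ in range(rounds):
        # Hypothetical allocation rule: patrols proportional to recorded**power.
        weight_a = recorded_a ** power
        weight_b = recorded_b ** power
        patrols_a = total_patrols * weight_a / (weight_a + weight_b)
        patrols_b = total_patrols - patrols_a
        # Crime is only recorded where officers are present to see it,
        # so next round's "data" mirrors this round's allocation.
        recorded_a = true_rate * patrols_a
        recorded_b = true_rate * patrols_b
        shares.append(recorded_a / (recorded_a + recorded_b))
    return shares


if __name__ == "__main__":
    # Start from a 55/45 historical skew between two identical neighborhoods.
    print([round(s, 3) for s in simulate_patrols(0.55, rounds=5, power=1.0)])
    print([round(s, 3) for s in simulate_patrols(0.55, rounds=5, power=2.0)])
```

With `power=1.0` the initial 55/45 skew never corrects itself, even though the two areas are identical; with `power=2.0` (a hypothetical "hot-spot" rule that favors whichever area already leads) A's share climbs past 99% within five rounds. Either way, the data only ever confirms where the model already chose to look.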

“Late at night, a police officer finds a drunk man crawling around on his hands and knees under a streetlight. The drunk man tells the officer he’s looking for his wallet. When the officer asks if he’s sure this is where he dropped the wallet, the man replies that he thinks he more likely dropped it across the street. ‘Then why are you looking over here?’ the befuddled officer asks. ‘Because the light’s better here,’ explains the drunk man.” (Source: these exact words have 88 Google results.)

Weapons of Math Destruction: slpl.bibliocommons.com/item/show/1357767116